Introducing Large Neurosymbolic Cognitive Models

We are building the next generation of generalist AI agents that autonomously learn from observation, feedback, and experimentation.

The future of AI isn't just about automating individual tasks – it's about creating systems that learn, adapt, and master new skills just like humans do. Today, we're excited to introduce a novel architecture for self-improving AI: Large Neurosymbolic Cognitive Models (LNCMs), representing the next evolution beyond reasoning LLMs.

LNCMs possess the ability to understand and reflect on their own capabilities and limitations while autonomously acquiring new skills. This enables LNCMs to learn and adapt based on feedback received through experiences and human interactions.

We’re proud to announce that the LNCM architecture achieves a new state-of-the-art result of 93% on the WebVoyager benchmark.

The WebVoyager benchmark comprises 640 scenarios on real, live websites. A number of time-dependent scenarios had to be updated manually, and we kept those updates minimal. For example, a scenario containing a date in March 2023 was updated to the same date in March 2025. Only the year changed, which preserves the integrity of the benchmark.
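As a rough illustration of what a year-only update looks like, here is a minimal Python sketch. The scenarios were updated by hand, so this is not the procedure that was used; the function name `shift_year` and the example task text are hypothetical.

```python
import re


def shift_year(task_text: str, old_year: int, new_year: int) -> str:
    """Replace standalone occurrences of old_year with new_year.

    Hypothetical helper: only the year token changes, so the day,
    month, and the rest of the task wording stay exactly as written.
    """
    # The lookarounds keep the match from firing inside longer digit runs
    # (for example an order number that happens to contain "2023").
    pattern = re.compile(rf"(?<!\d){old_year}(?!\d)")
    return pattern.sub(str(new_year), task_text)


# Made-up task text, for illustration only.
example = "Find a hotel in Paris with check-in on March 14, 2023."
print(shift_year(example, 2023, 2025))
```

A purely textual change like this keeps the task itself identical. A manual review is still needed for dates that do not exist in the target year, such as February 29.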

Multiple human evaluators assessed the agent’s runs, in place of the auto-evaluation method outlined in the original paper. This ensured that the results reflect only the agent’s performance, rather than the combined performance of the agent and an automated evaluator.

Notably, this state-of-the-art 93% success rate was achieved without the self-optimization feature. With self-optimization enabled, we expect to reach a 100% success rate as the system autonomously masters web browsing tasks.

Tessa outperforms Convergence’s Proxy (82%), Emergence’s Agent-E (73%), and H Company’s Runner H (67%).

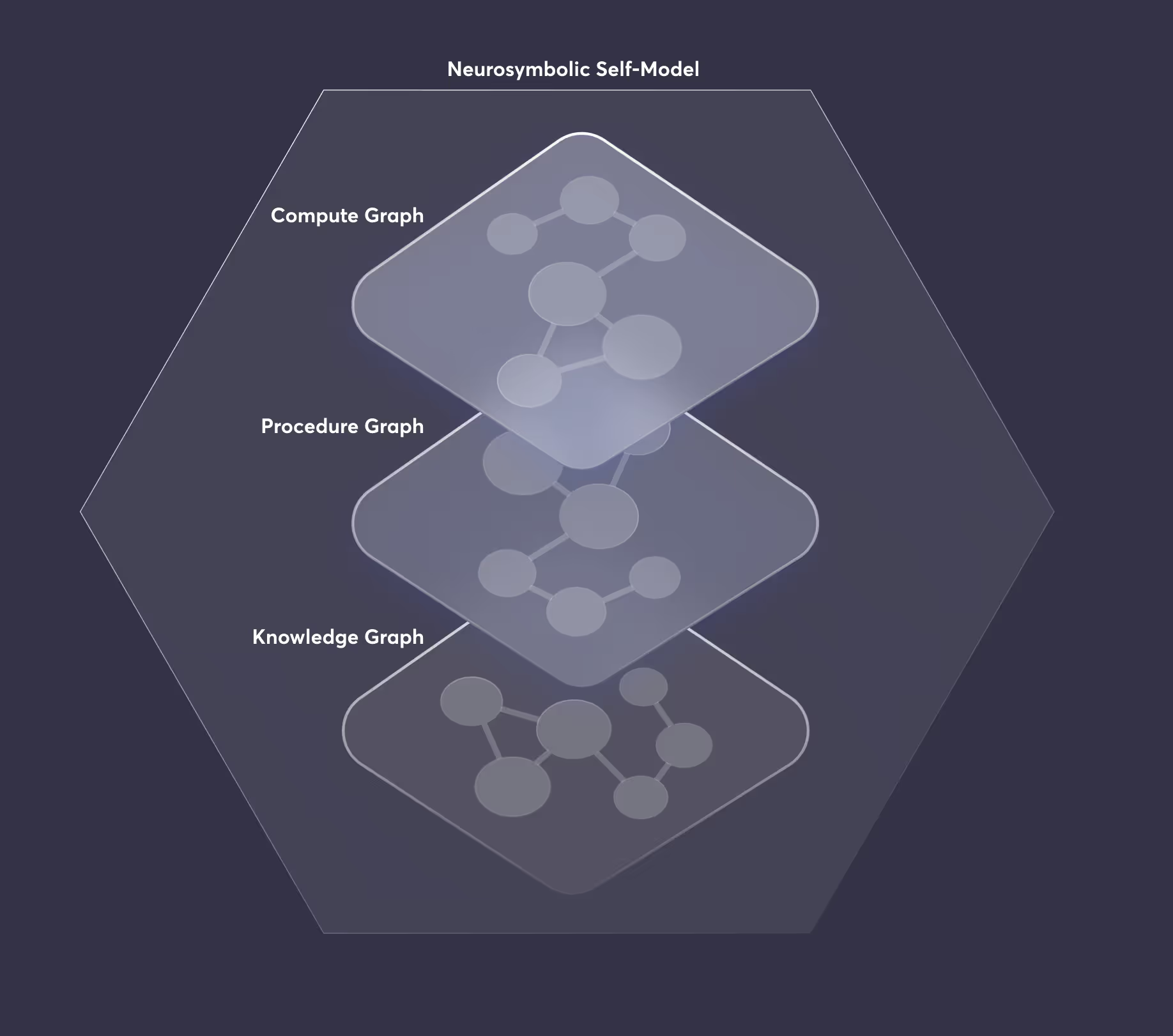

Tesseract takes inspiration from how the human brain processes information and learns from experience. It employs three interconnected graph structures, wrapped in a neurosymbolic metacognitive layer. Graphs are a powerful choice because they give structure to both data and operations while still allowing incremental improvements to the ontology: the system can reconfigure and optimize the graphs as necessary.

The three graph structures are encapsulated by a neurosymbolic self-model. This self-model gives Tesseract something akin to self-awareness – a comprehensive understanding of its own capabilities, limitations, and internal structures. Rather than relying on single-shot language model calls, as many current AI agents do, Tesseract actively monitors its knowledge and skillset, ensuring more reliable and grounded responses.

This self-model goes beyond mere monitoring: it enables Tesseract to reconfigure itself when faced with novel challenges that lie outside its existing capabilities. While tree search has become a popular mechanism for allowing AI agents to explore different actions and learn from their outcomes, Tesseract extends this concept to metacognition. It can search through different configurations of its cognitive graphs, effectively learning how to think differently when faced with new types of problems. This autonomous self-optimization, we believe, is a significant step towards fully generalizable superintelligence.

We're now putting this powerful technology to work across various industries, from financial analysis and consulting to real estate. If you have any suggested use cases in mind, sign up for our waitlist and let us know!